Attributes like style, material, and fit are tagged subjectively or inconsistently.

Models struggle to capture sleeves, collars, and other garment components.

Lighting, angles, and resolution differ between studio and real-world images.

Material properties like sheen, weave, or patterns are often missed.

Visual, text, and structured data are difficult to align effectively.

Manual labeling is costly, time-consuming, and error-prone.

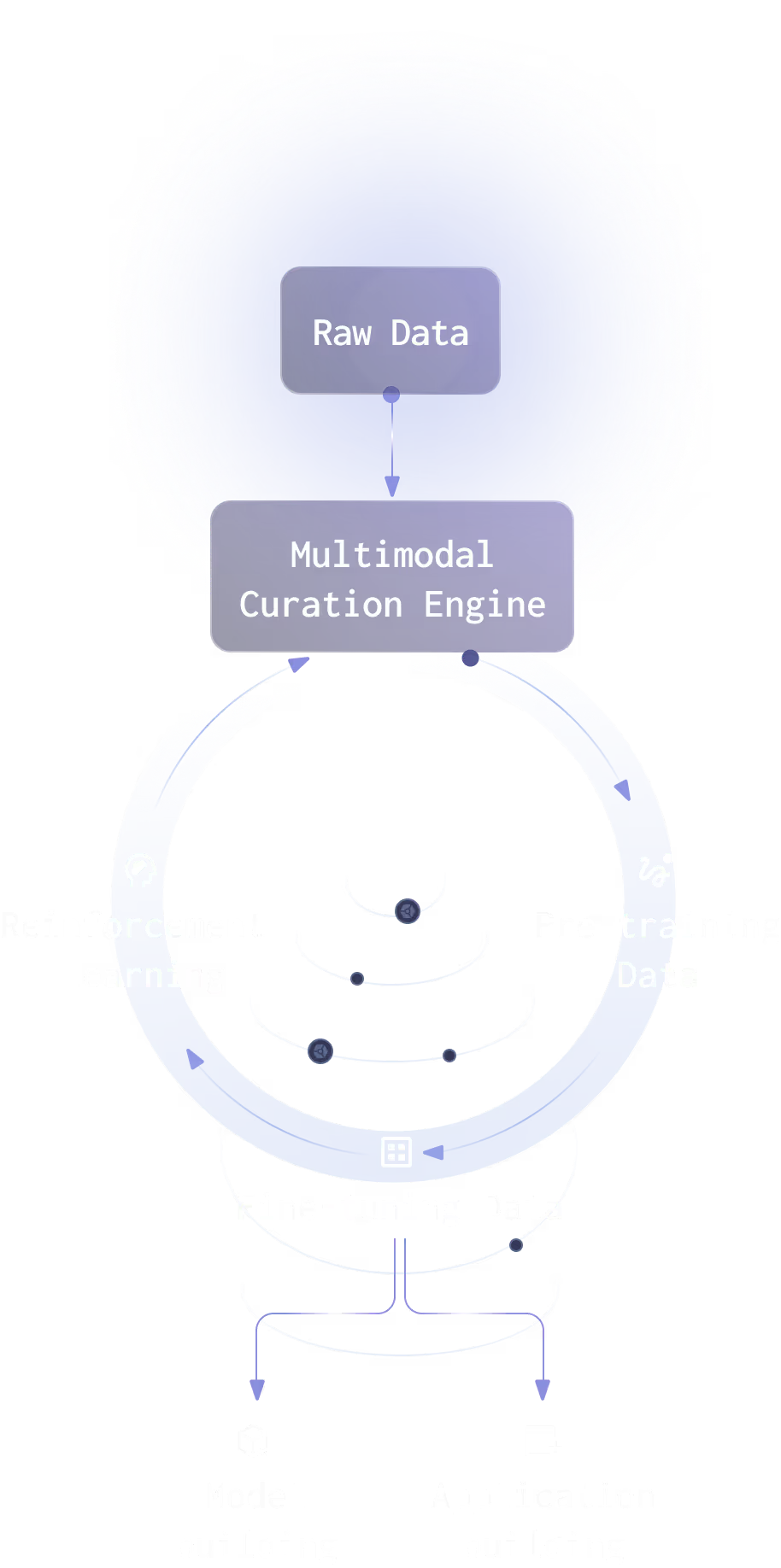

Fine-Grained Semantic Pairing Engine

Enhanced Pose & Region Annotation

Fabric Simulation & Rendering

Multimodal Knowledge Graph Creation

Intelligent Dataset Augmentation